The government has banned this year’s Pride parade on the grounds of child protection, denying LGBTQ+ people, whom it accuses of promoting “anti-child” values, the right to assemble (which is otherwise protected by the constitution). The government is trying to lend weight to its intimidation tactics by threatening to use facial recognition technology (FRT) against those who participate in the event. This sounds very scary, partly because FRT is a form of a technology you would have to be living under a rock not to have heard about: artificial intelligence.

Is it really dangerous to go to Pride this year?

This issue is discussed from a legal perspective in an article by the Hungarian Civil Liberties Union (TASZ/HCLU), which concludes that the legal consequences are not a serious concern (Budapest Pride gives a detailed account of the legal situation in this article). However, these articles do not really examine how FRT works or how EU law might affect its regulation and use. I therefore contacted experts in the fields of law and FRT and organised an online meeting to discuss these issues.

A recording of the event is available in English on the website of the organiser, the AI Policy Lab at Umeå University, Sweden.

The panellists are Angelina, Assistant Professor of Computer Science at Cornell University in the US, a researcher on responsible AI and an expert on FRT, and Matilda, Professor of International Law at the University of Gothenburg in Sweden, an expert on the relationship between AI and law.

Facial recognition technology

There are two main types of facial recognition: one-to-one and one-to-many matching. The former involves comparing a captured image of a single person with a single reference image (for example, at border control, where a photo taken of you is compared with the picture in your passport to check that the passport is really yours). This technique is very advanced, and the task is comparatively simple. Pride is a case of one-to-many matching, where the images of many people in, say, a police recording or security camera footage would have to be compared with the images in a police database, without knowing in advance who you are looking for. Angelina stressed that this is a much more complex task on a technical level, so misidentifications are more likely to occur. That said, Angelina pointed out, research has shown that FRT has improved a lot, so we cannot count on it failing to identify a person correctly.
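To make the difference between the two tasks more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not how any police system or the panellists' own research works: the three-number "face vectors", the names `person_a` and `person_b`, the threshold of 0.9 and the false match rate of 0.1% are all made up for the example, and the last function assumes that every comparison is independent, which is a simplification.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two equal-length vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(probe, reference, threshold=0.9):
    """One-to-one: does the probe match this single reference image?"""
    return cosine_similarity(probe, reference) >= threshold


def identify(probe, gallery, threshold=0.9):
    """One-to-many: list every database entry that looks like the probe."""
    return [name for name, reference in gallery.items()
            if cosine_similarity(probe, reference) >= threshold]


def chance_of_any_false_match(per_comparison_rate, gallery_size):
    """Chance of at least one wrong hit, assuming independent comparisons."""
    return 1.0 - (1.0 - per_comparison_rate) ** gallery_size


# Hypothetical toy data: real systems derive long vectors from face images.
probe = [1.0, 0.0, 0.2]
gallery = {
    "person_a": [0.9, 0.1, 0.2],
    "person_b": [0.0, 1.0, 0.0],
}

passport_check = verify(probe, gallery["person_a"])   # one comparison
crowd_search = identify(probe, gallery)               # one per database entry

# With an assumed 0.1% false match rate per comparison:
risk_one_to_one = chance_of_any_false_match(0.001, 1)
risk_one_to_many = chance_of_any_false_match(0.001, 10_000)
```

In this toy setting, the one-to-one check makes a single comparison, so its risk of a wrong match stays at the assumed 0.1%. Searching the same face against 10,000 database entries makes 10,000 comparisons, and the chance that at least one of them is a wrong match climbs close to certainty. This is the arithmetic behind Angelina's point that misidentifications are more likely in the one-to-many case.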

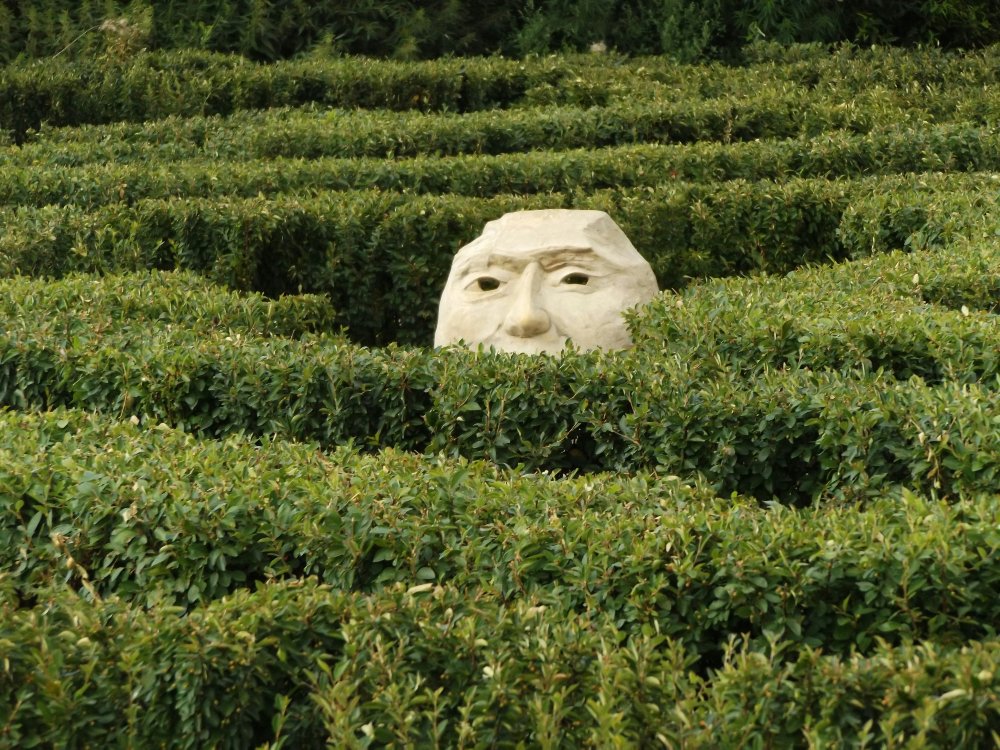

When asked about the weaknesses of the technology and how it could possibly be deceived, Angelina said that FRT works primarily with shapes, using facial features such as the shape of the nose, eyes or mouth to recognise a face. Therefore, objects that obscure the face, or makeup that alters these shapes, can help to avoid recognition. Altering the colour of hair or skin with paint is less promising. Angelina cautioned, however, that none of these methods guarantees that the technology will not recognise us. And I would add that, according to the HCLU statement, wearing a mask can lead to criminal prosecution.

Therefore, if we want to follow Angelina’s advice to cover our faces, we might want to give drag makeup a try, which is in keeping with the spirit of Pride anyway, and which can contribute to changing the shape of the eyes, mouth, nose, without resorting to a mask. However, it should be noted that, from a technical point of view, the best chance of deceiving the technology is to conceal or cover up the shape of the face – which is obviously why the law was designed to make this impossible.

And what about the legal status in the EU?

Matilda unfortunately did not paint a very optimistic picture of the law’s ability to protect us. Two separate branches of EU law apply to this situation. The first is the recently passed AI Act, which defines who can use various AI technologies and how. Unfortunately, the use envisaged by the Hungarian government, despite being directly and purposefully aimed at intimidating a minority, does not contravene this law unless the technology is applied right there and then at Pride to identify people. I, for one, highly doubt that the facial recognition would be done on the spot; it would much more likely be done retroactively, at some company or office, which is a long and cumbersome process according to the HCLU. So the AI Act cannot be used in any practical way to oppose the use of FRT.

By contrast, fundamental human rights, as laid down in the European Convention on Human Rights (ECHR), prohibit discrimination on the grounds of sex or sexual orientation, and the unequal application of the law on such grounds. Matilda therefore pointed out that a person who is penalised for participating in Pride (whether or not identified by facial recognition technology) can take their case to the European Court of Human Rights on the grounds that they have been subjected to discriminatory treatment, as their right to assemble is being denied because of their sexual orientation. Unfortunately, Matilda was not very optimistic about this procedure either. It is lengthy and complicated and cannot really be managed without the support of a lawyer or other legal assistance. In addition, EU Member States’ legislation and application of the law can and do diverge from the principles laid down in the ECHR, and there is often no adequate remedy.

So what shall we do?

At this point, it became clear that there is no guaranteed protection against these repressive measures, either technologically or legally. Matilda argued in favour of solidarity: the more of us show up, the smaller the threat to individuals, because it becomes more embarrassing to launch a political attack against a bigger crowd. The participation of politicians is particularly important and beneficial in this respect, because the heads of state and MEPs who intend to participate in the march in Budapest together with an EU Commissioner all enjoy immunity and therefore are not risking anything. In response to my question, Matilda also suggested that if you work for a company where equality is a priority, participating as a company or having its support might help a lot, since individuals are more vulnerable than a company.

For her part, Angelina stressed the importance of thinking about what data, especially pictures, we make available about ourselves online. Facebook, WhatsApp and other social media are not trustworthy hosts of our data: they regularly share or even sell it, making it easier for facial recognition technology to do its job. Instead, Angelina recommended an open-source app called Signal for communication. It works much like the apps above, but because it is open-source, meaning that its software is open and available for review, its handling of our data can be checked, and it does not share our photos with other apps or companies. It’s also worth thinking about whether we should take photos ourselves at an event like this, as they could be used as evidence against ourselves or our friends.

If we compare these opinions with the recommendation and the legal brief of the HCLU, my hope is that we can feel more assured about participating in Pride, since we can only shake off oppression through resistance and mass expression.

And a special thanks to the AI Policy Lab and the colleagues at the Lab, especially my organising partner Petter, for making the event possible!

Translation by Zsófia Ziaja